For a long time, Google Cloud was not taken seriously, especially by enterprise clients. Looking at recent developments, however, it seems that Google has been playing a very long-term strategy, aiming to win on its own terms. Additionally, from a Nordic perspective, as Amazon enters the market with its e-commerce fulfilment offering, top management should start looking at Google very closely to adjust to a new competitive landscape that affects both the online and the physical world.

Having followed the development of the cloud industry for some years, we find it obvious that Google is fundamentally changing the rules of the game. When you can’t compete on the number of data centres and the amount of infrastructure, why even bother to play on the same field? Strategically, Google is changing the rules to its advantage by building on its strengths in Machine Learning and its roots in data & analytics. We strongly believe in, and are thoroughly happy to witness, a paradigm shift not only in cloud computing but also in the democratisation of Machine Learning, from academic laboratories into everyday use, even for non-technical marketers or business designers.

The Covid-19 crisis has put Public Cloud on every company’s agenda due to the virtualisation of processes and the workforce

According to research and survey data, enterprises across Europe are still at a very early stage in their cloud transformation journeys, and most of the growth in Public Cloud usage is still to come in the years ahead. In practice, this means that we have so far seen only a first glimpse of what cloud computing has to offer businesses and consumers. Even the most conservative industries, such as banking, insurance, healthcare and public services, are in the middle of moving their data and processes into the cloud. Besides looking for efficiency gains, most of these industries are being disrupted by new market entrants that have been cloud-native from the beginning. These entrants have the advantage of not carrying the burden of legacy technologies or being stuck between two worlds, which is the nightmare of every CEO. It’s only a matter of time before on-premise infrastructure is of marginal relevance.

So far, development and competition have clearly been led by Amazon

Amazon has been the leader in the Public Cloud space since it popularised cloud computing by launching Amazon Web Services back in 2006. The breadth and depth of its offering are obviously great. However, Amazon has also been criticised for its hybrid offering, and companies competing with Amazon’s e-commerce business have understandably been cautious about Amazon’s cloud offering. It’s little wonder that those running a retail or e-commerce business while storing and processing their most valuable data on Amazon cloud (AWS) are having serious second thoughts, especially in the Nordics, where Amazon is currently expanding with local delivery and service chains.

Microsoft holds the second position, measured by global market share, and its advantage is a strong foothold within corporate IT departments. Microsoft cloud (Azure) is a natural choice for many enterprises that already rely heavily on Microsoft technologies. Google holds the third position and has been somewhat lagging behind, partly because of a late start in commercialising its cloud solutions. Although Google has been building and using cloud solutions internally for a long time, it took time before these were opened up to customers outside Google.

We think that things are about to change, although there might still be a long road ahead. Google has been criticised for not taking the needs of enterprise customers seriously enough, but their recent customer wins such as Deutsche Bank, HSBC, Telefónica and Renault prove that Google’s attitude towards enterprise customers has changed. Their way of building 10-year collaboration deals with leading enterprises shows their serious commitment to staying in the business over the long term.

Smart bets on technology development enable Google to take advantage of their competitors’ infrastructure

The seeds of Google’s long-term strategy were planted last decade with the development of Kubernetes, a technology that makes it easy to run containerized applications across on-premise and cloud infrastructure. Google made the resulting technology platform openly available across the cloud industry, and competitors such as Microsoft and Amazon have widely adopted it as a native part of their offerings. Even then, Google envisaged that containerization and the separation of data storage from computation would open up long-term strategic options and foster open innovation. It’s worth highlighting that openness inevitably increases the speed of development from an industry or ecosystem perspective.

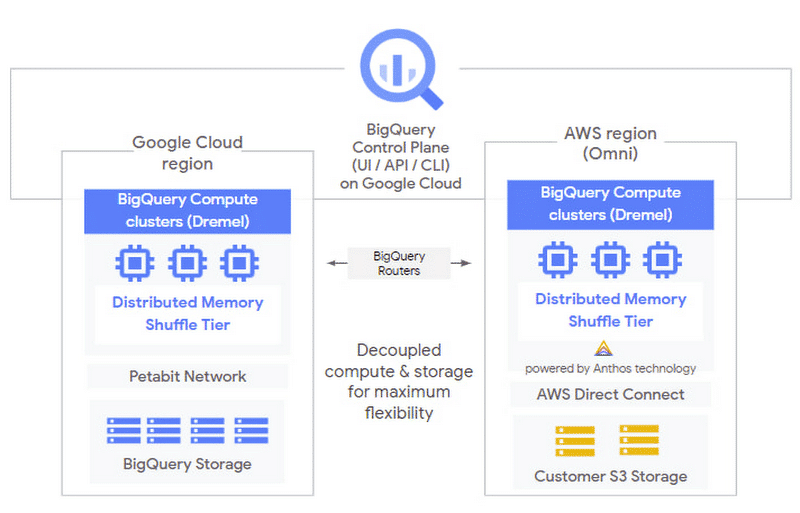

This is already ancient history, but the visible signs of change started with the introduction of Anthos at the annual Google Next event in 2019. Anthos is a Google Cloud solution, built on the foundation of Kubernetes, that enables you to run your applications anywhere: on-prem, on Google Cloud or even on Amazon and Microsoft clouds. This summer Google took the next step with its announcements, especially around BigQuery Omni, at the Google on Air online series of events. In our opinion, BigQuery has always been at the centre of the Google Cloud platform. Google made this clear by accepting a smaller market potential for its traditional Public Cloud services while, at the same time, expanding the addressable market for BigQuery (Omni). The groundbreaking innovation of BigQuery Omni is that, thanks to Anthos and the BigQuery architecture, which separates data storage from computation, you can soon run BigQuery on both Amazon and Microsoft infrastructure without moving data around. You don’t need to be an evangelist to see Google extending that logic to a major part of its cloud applications in the coming years.

*Google BigQuery Omni architecture with the ability to run BigQuery on Amazon cloud (AWS). According to Google, the ability to run BigQuery on Microsoft cloud is also on the way.

To understand the full context, let’s look at the guiding principles of Google Cloud development, especially in BigQuery. These principles are openness, managed services and serverless. Since they are so fundamental, it’s worth explaining their implications in detail. These concepts are not always defined unambiguously, and different vendors use slightly different definitions. Here’s our view on what they mean:

1. Openness:

Openness can be seen in two ways. Firstly, it means that you’re not tied to a single vendor or provider. This is what Google firmly believes. So, for example you can run different applications in different clouds and it’s up to you to choose the mix that best fits your needs. BigQuery Omni is a concrete example of this. If you happen to be heavily dependent on Amazon cloud, you don’t have to migrate your applications and data into Google Cloud before you can start benefiting from BigQuery. Instead, you’ll soon be able to run BigQuery in Amazon cloud next to your existing applications and data. This is openness in practice.

Secondly, openness is also closely linked to Open Source. For example, TensorFlow (a Machine Learning library) and Kubernetes were originally developed by Google but have since been released to the open-source community. As a result, Kubernetes is now also available in Amazon and Microsoft clouds, although development is still heavily led by Google: Google is still the largest contributor to the Kubernetes project and funds the infrastructure for Kubernetes development. In general, Google is one of the largest open-source contributors together with Microsoft, whereas Amazon’s contribution is much smaller. (https://solutionshub.epam.com/osci)

2. Managed services:

Managed services mean that Google manages and maintains the infrastructure for you, so you don’t have to worry about, for example, security patches, version updates, high availability or redundancy configurations. However, you still need to choose the right infrastructure size for your services. For example, before you can start using Google Cloud SQL, you need to create an instance, define how many CPU cores and how much disk space you need, and configure the backup settings. Thus, you need to plan your workloads and needs in advance and also focus on cost optimisation. If you don’t fully utilise the infrastructure that you provisioned, you need to decrease its size, or even shut it down if you don’t need it at all. This is definitely a lot easier than working directly with virtual machines (IaaS), but not as easy as it could and should be.

3. Serverless:

A serverless service is also managed, but a managed service is not always serverless. Of course, the service is powered by servers behind the scenes, but with serverless offerings these are completely abstracted away. You don’t need to configure CPU cores or disk sizes, and you can start using the service without provisioning it first. For example, to use BigQuery for the first time, you just need a Google Cloud user account with payment details configured, and then you can start writing SQL against the public data sources provided by Google.

Serverless also means superb scalability. You can use as much capacity as Google offers and allows, so you’re not limited by, for example, the number of CPU cores you selected initially. In addition, in most cases serverless also means a different pricing model, i.e., “pay per use”. If a service is not serverless and you have to provision the infrastructure, certain costs usually run 24/7 and are based on the selected infrastructure size. With serverless, you usually pay per usage, and if you don’t use the service for a certain time, you pay nothing. With Google Cloud Functions, for example, the pricing model is mainly based on the number of function calls and how long they run. With BigQuery, it’s mainly based on how much data you store and the amount of data processed by your queries (a fixed-price option for query pricing is also available). This is fair and allows you to start small with minimal costs. And when your usage increases, the service scales automatically. Thus, always select serverless where possible, because this allows you to focus on your business.
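To make the difference between the two pricing models concrete, here is a minimal sketch in Python. It assumes a provisioned service billed at a flat hourly rate around the clock and a serverless service billed per unit of usage (for instance, per terabyte processed). All function names and prices are hypothetical placeholders chosen for illustration; they are not actual Google Cloud list prices.

```python
# Illustrative cost model: every price below is a made-up placeholder,
# not a real Google Cloud list price.

HOURS_PER_MONTH = 730  # average number of hours in a month


def provisioned_monthly_cost(hourly_rate: float) -> float:
    """Provisioned service: billed for every hour, whether used or not."""
    return hourly_rate * HOURS_PER_MONTH


def pay_per_use_monthly_cost(units_used: float, price_per_unit: float) -> float:
    """Serverless service: billed only for the units actually consumed."""
    return units_used * price_per_unit


def break_even_units(hourly_rate: float, price_per_unit: float) -> float:
    """Monthly usage at which both pricing models cost the same."""
    return provisioned_monthly_cost(hourly_rate) / price_per_unit


if __name__ == "__main__":
    hourly_rate = 0.50     # hypothetical price of an always-on instance
    price_per_unit = 5.00  # hypothetical price per unit of usage

    for units in (0, 10, 100):
        fixed = provisioned_monthly_cost(hourly_rate)
        variable = pay_per_use_monthly_cost(units, price_per_unit)
        print(f"{units:>4} units: provisioned {fixed:.2f}, pay per use {variable:.2f}")

    print("break-even at", break_even_units(hourly_rate, price_per_unit), "units")
```

With these placeholder numbers, the provisioned service costs the same every month regardless of usage, while the pay-per-use service costs nothing in an idle month and only becomes the more expensive option once usage passes the break-even point. Real pricing has more dimensions (storage, free tiers, committed-use discounts), so treat this only as a way to reason about the shape of the two models.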

In contrast to putting data centres all over the continents you can start to see some specialisation

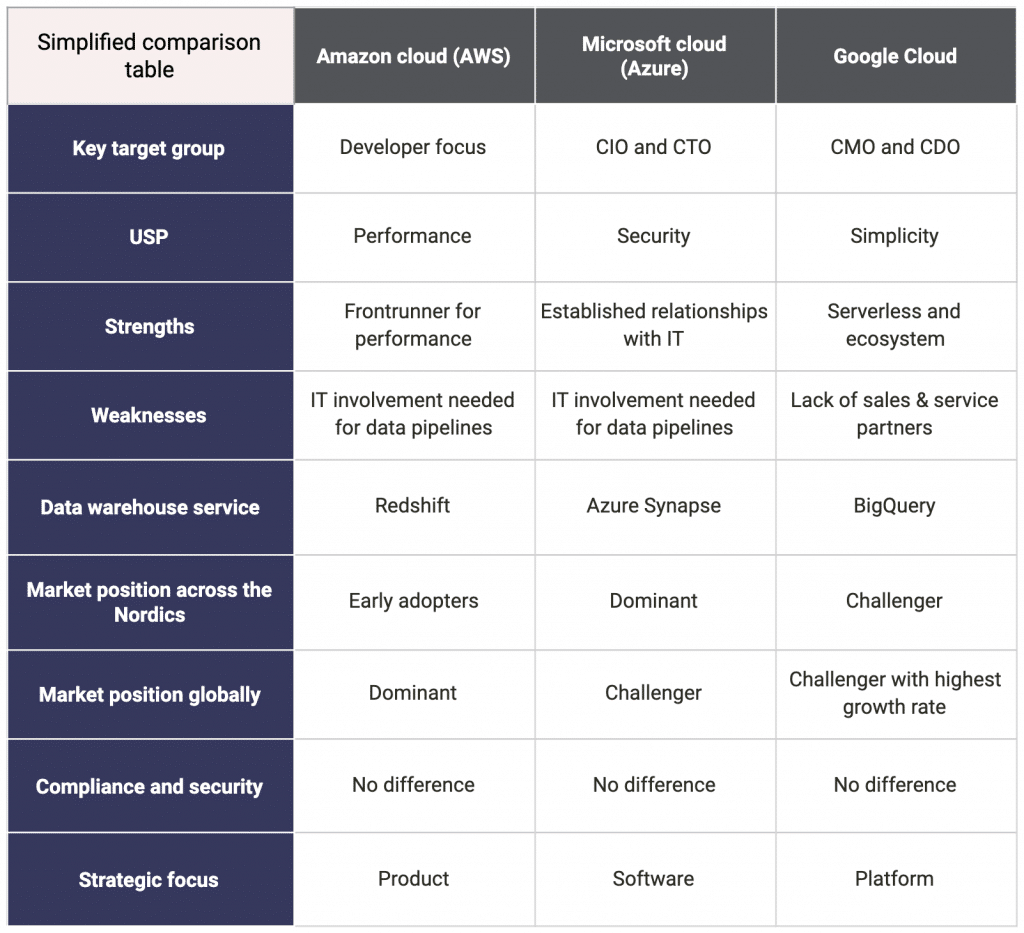

For the past years, there have been no remarkable differences between the offerings of the three big players in the Public Cloud field, but now you can see clear specialisation emerging. It is difficult to compare the Public Cloud offerings of Amazon, Microsoft and Google. From a high-level perspective, their compute, storage and networking solutions are similar, and a detailed comparison of their service offerings is almost impossible for two reasons. First, the speed at which these companies are developing their offerings is breathtaking, so the results of your comparison might be out of date before you’ve finalised the implementation project. Second, comparing prices is really difficult without knowing the exact workloads, which is usually impossible if you’re building something novel. For a simplified high-level comparison, we have put together a table of the three big Public Cloud vendors that describes their differences in bullet points.

One striking perspective on Google’s cloud approach emerged around two years ago, when the company began to address the enterprise market more seriously. Thomas Kurian, who came from Oracle and has deep roots in dealing with enterprise clients, took over as CEO of the Google Cloud business area. Already then, it was clear that Google seemed to be solving a rather different business problem from its peers. Whereas Amazon was striving for the best performance and Microsoft was trying to move its Office and productivity offering over to the cloud, Google stated that there would be an inevitable and massive global shortfall of skilled people able to work hands-on with data, analytics and especially Machine Learning, or something that in the future might look like Artificial Intelligence. Instead of merely bringing legacy systems and workloads to the cloud for efficiency reasons, Google’s overall mission was to democratise the utilisation of data and Machine Learning.

*Toolbox for simplified Public Cloud vendor comparison

Two important factors when selecting Public Cloud vendors

- What are your specific needs and requirements?

- What will your capabilities to address these needs be in 12 months?

Instead of high-level sales pitches or Gartner Magic Quadrants, you should focus on the following two factors:

- What are the specific needs and requirements that matter to your business? For example, the areas that provide competitive advantage and marketing differentiation to your business might have some specific requirements, whereas in other areas a market-standard service works fine.

- What capabilities will you need in 12 months, and how do you expect the different cloud vendors’ offerings to look in the future?

Foreseeing the future is, of course, difficult, but knowing something about the history, strategy & vision, and recent focus areas of Amazon, Google and Microsoft might help you in these predictions. For example, consider Google’s mission statement “to organise the world’s information and make it universally accessible and useful”, its relentless focus on information and data gathering, and the recent BigQuery Omni release. Together, these make it quite easy to guess that more BigQuery Omni-type services running on Amazon and Microsoft clouds will be launched in the near future, and that they will relate to the areas where Google Cloud shines, i.e., data and AI/ML. Surprises will happen, but those are what make working with Public Clouds so interesting.

From a sales & marketing perspective, you should look into these capabilities and applications

Marketers and sales professionals should keep in mind that Google is inherently a different company than Amazon or Microsoft. Marketing and advertising are deeply rooted in Google’s DNA, and in 2019 more than 80% of Google’s revenue came from advertising.

All this can also be seen in Google’s cloud offering. For example, Google’s BigQuery data warehouse has out-of-the-box integrations with Google’s marketing and advertising tools, and the marketplace contains a wide selection of third-party integrations. Combine this with the fact that BigQuery is the most popular Public Cloud data warehouse, its serverless nature, which makes it easy to use for non-technical people, and Google’s leading-edge AI/ML capabilities, and BigQuery becomes the perfect choice for building a marketing data warehouse.

Currently, Google has nine different products with over one billion users each, spread globally. This provides a rather unique platform for utilising Machine Learning to create superior customer experiences and to learn from user interaction in order to further enhance Google’s Machine Learning capabilities.

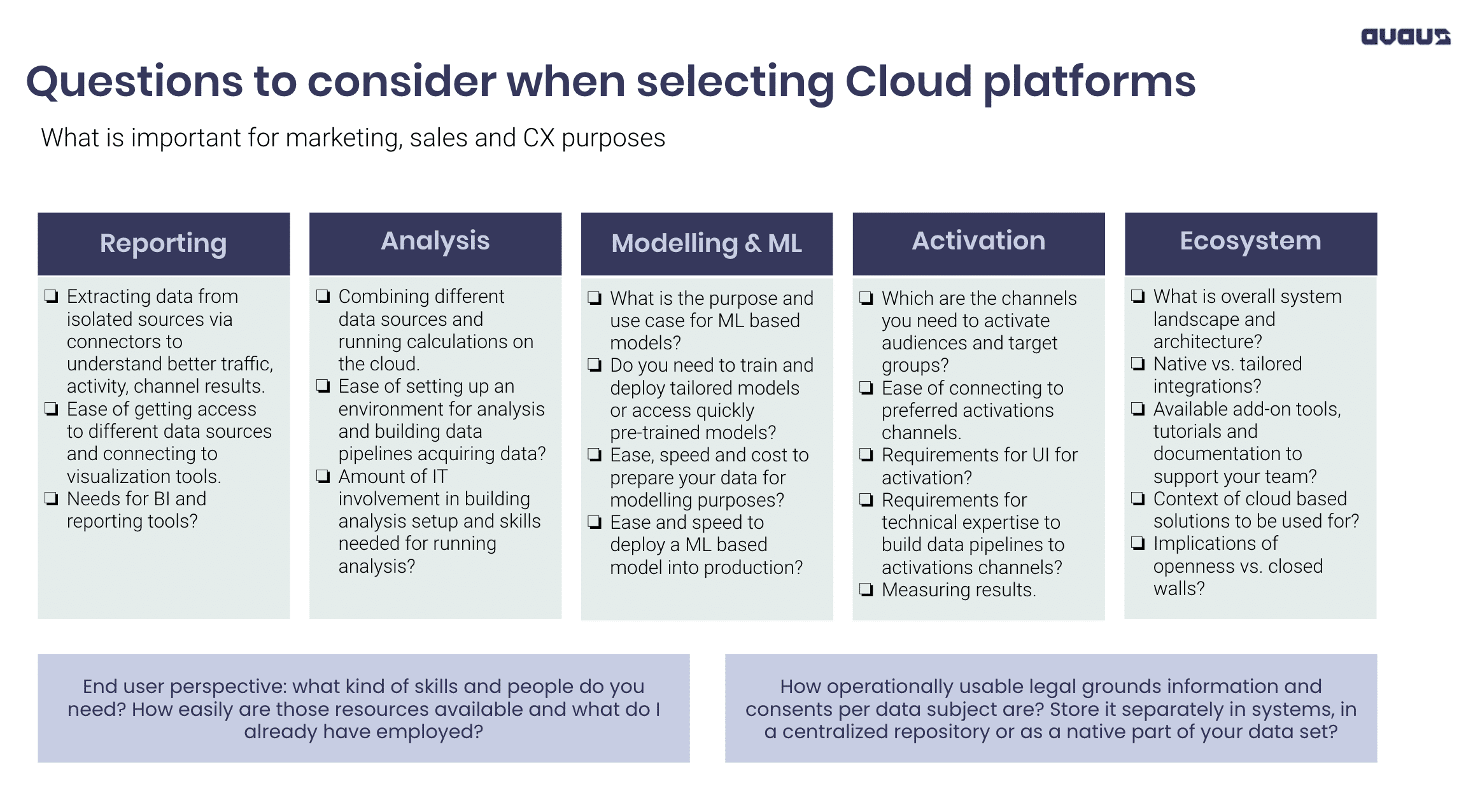

Specific questions to consider when selecting cloud platforms for marketing, sales and customer experience optimisation purposes

We have gathered a purpose-specific list of questions to ask when selecting a cloud platform for sales & marketing use cases. Our aim is to help you through a complex selection process, which can be boiled down to a few key points covering reporting, analytics, modelling & Machine Learning, and activation of data, looking at the whole ecosystem.

*Toolbox for CMOs and CDOs for cloud platform selection

Multi-Cloud and Hybrid Cloud environments will be the new normal

For clarification, it’s good to define exactly what is meant by both Hybrid and Multi-Cloud environments. Hybrid Cloud combines data across on-premises data platforms and Public Cloud, whereas in a Multi-Cloud setup a given company is using multiple Public Cloud platforms like Google Cloud and Microsoft cloud for different purposes or even in parallel, mixing workloads across them.
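The two definitions above are not mutually exclusive, which a small sketch can make explicit. The Python function below is purely illustrative: the environment labels and the function name are our own assumptions, and it simply applies the definitions as stated, treating anything other than on-premises as a Public Cloud platform.

```python
from typing import Iterable, List

ON_PREM = "on-prem"  # illustrative label for on-premises infrastructure


def classify_setup(environments: Iterable[str]) -> List[str]:
    """Label a setup as hybrid and/or multi-cloud, per the definitions above.

    Every environment other than ON_PREM is assumed to be a Public Cloud.
    """
    envs = set(environments)
    public_clouds = envs - {ON_PREM}

    labels = []
    # Hybrid Cloud: on-premises platforms combined with at least one Public Cloud.
    if ON_PREM in envs and public_clouds:
        labels.append("hybrid")
    # Multi-Cloud: two or more Public Cloud platforms in use.
    if len(public_clouds) >= 2:
        labels.append("multi-cloud")
    return labels
```

For example, `classify_setup(["on-prem", "google-cloud", "aws"])` yields both labels, while a company running everything on a single Public Cloud gets an empty list, since it is neither hybrid nor multi-cloud.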

In recent surveys, the majority of Public Cloud users responded that they are working with two or more providers. Our strong hypothesis is that enterprises will slowly adapt, first to a Hybrid Cloud setup, which is already rather common, and over time to a Multi-Cloud environment. This shift will be driven both by practical business requirements, with specific solutions from different cloud vendors serving different purposes, and by the ease of operating across multiple clouds. It will, however, put enterprise cloud operations and IT security teams to the test, as they must apply the same governance and security standards across multiple cloud platforms using efficient and scalable workflows.

It will be extremely interesting to follow and to operate in an environment where the pace of change exceeds what a human is able to handle and where new opportunities arise with almost daily announcements. We at Avaus will keep following the developments and focus on turning data into results faster and better than anyone else.

Avaus Marketing Innovations has recently become a Google Cloud partner, focusing on delivering Data and Algo Factories, mostly on Google technologies, for its clients across Northern Europe to boost sales & marketing efficiency.